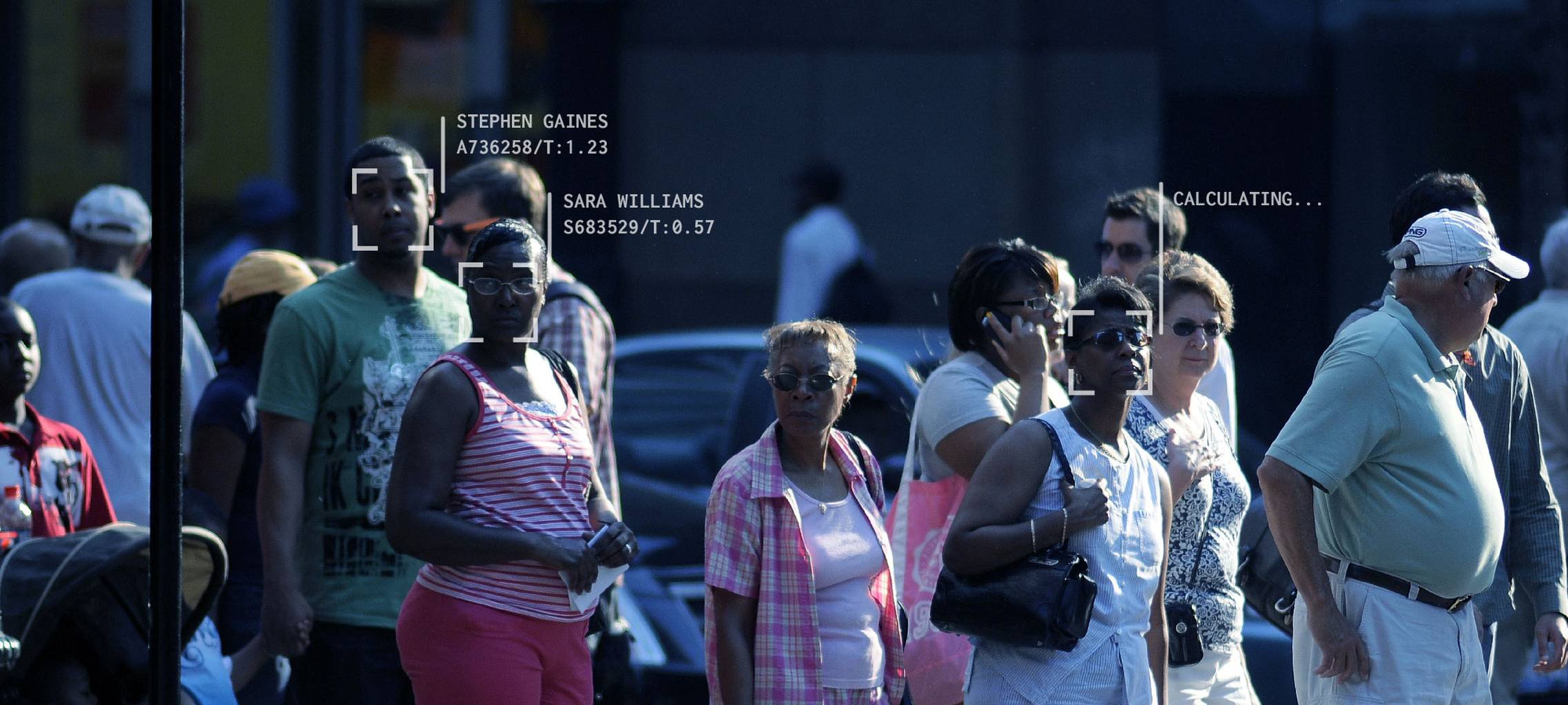

Over the last few years, we have seen a growing number of concerns about artificial intelligence and its implications for race. The most recent example I can think of is Face ID not working reliably for people of color, implying that their skin is too dark for Face ID to detect. The idea that someone's skin color could make them undetectable to artificial intelligence is a bit scary. Where do these biases come from? Is it

MIT has been working to fight racial bias in facial recognition systems by developing an algorithm that automatically removes bias from the training data, so that the data covers a wider range of races. The code scans a dataset, identifies the set's biases, and then resamples it to ensure better representation for people, regardless of skin color.
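The post doesn't spell out how the resampling step works, so here is a minimal sketch of the general idea, not MIT's code. It assumes each face image has already been turned into a feature vector by some encoder (the `latents` array is a hypothetical input), estimates how common each image's features are using per-dimension histograms, and then resamples with probability inversely proportional to that frequency, so under-represented faces are drawn more often. The names `debias_sampling_probs`, `resample_indices`, `n_bins`, and `alpha` are illustrative.

```python
import numpy as np


def debias_sampling_probs(latents, n_bins=10, alpha=0.01):
    """Return one sampling probability per example.

    latents: array of shape (n_samples, n_dims), a feature vector per image
    (assumed to come from some previously trained encoder).
    Rare feature values get higher probability; common ones get lower.
    """
    latents = np.asarray(latents, dtype=float)
    n_samples, n_dims = latents.shape
    weights = np.ones(n_samples)
    for d in range(n_dims):
        column = latents[:, d]
        # Count how many samples fall into each bin of this feature dimension.
        counts, edges = np.histogram(column, bins=n_bins)
        # Map every sample to its bin index (0 .. n_bins - 1) using the inner edges.
        bin_idx = np.digitize(column, edges[1:-1])
        freq = counts[bin_idx] / n_samples
        # Inverse-frequency weight; alpha keeps weights finite and tempers the boost.
        weights *= 1.0 / (freq + alpha)
    return weights / weights.sum()


def resample_indices(latents, rng=None, **kwargs):
    """Draw a same-size training set (as indices), favoring rare examples."""
    rng = np.random.default_rng() if rng is None else rng
    probs = debias_sampling_probs(latents, **kwargs)
    n = len(probs)
    return rng.choice(n, size=n, replace=True, p=probs)
```

For example, with 90 images whose single feature is `0.0` and 10 whose feature is `5.0`, each of the 10 minority images ends up with a higher sampling probability than each majority image, so a resampled training set is more balanced. Raising `alpha` softens this correction; lowering it makes the rebalancing more aggressive.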

The group conducting this research is MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL). According to CSAIL's mission statement, its goal is to pioneer new approaches to computing that will bring about positive changes in the way people around the globe live, play and work. And that seems to be what they're doing, doesn't it? By removing biases that we know exist, this technology would level the playing field for facial recognition. Wouldn't that be amazing?

An academic paper published in 2012 indicated that facial recognition systems from the vendor Cognitec performed 5 to 10 percent worse on people of color than on people who were white. Further, researchers have found that facial recognition systems developed in China, Japan and South Korea had difficulty distinguishing between white people and East Asians. And in an area where we might not have even thought about artificial intelligence bias, a recent study found that smart speakers were 30 percent less likely to understand non-American accents.

This is a huge problem, which is why it's great to see MIT investing in this kind of program through CSAIL. So where does the problem start? When datasets are created, it seems that they aren't being vetted properly, and that is what leads to these biases. These datasets are being used in security and law enforcement, so it's fundamentally imperative that they are free from bias.

All that said, this technology won't rule out all biases, but the results are significant. In fact, this particular system reduced categorical bias by 60 percent without affecting its precision. Those are pretty incredible numbers given that this is just the start. With any kind of technology, we need to build on what others have done in order to make a better product, and that's what I see happening here. This isn't going to be perfect right out of the gate, but it's better than it was and it's going to help improve people's lives.